Dec 10, 2025

Privacy in AI Coaching Apps – What Users Need to Know

AI emotional coach privacy matters. Understand sensitive data handling, encryption, user consent, and practical risks in WhatsApp coaching apps like Wisdom.


Over 80 percent of American users report concerns about personal data privacy when using AI-powered apps. With so many platforms handling sensitive details from emotional conversations to private goals, digital privacy is no longer just a buzzword. As AI coaching apps continue to shape daily lives, understanding their privacy fundamentals becomes critical for anyone who values control over personal information and safe technology choices.

Table of Contents

Privacy Fundamentals In AI Coaching Apps

Sensitive Data Types And Their Use

How AI Coaches Protect Your Information

Consent, User Rights, And Data Control

Common Privacy Risks And How To Avoid Them

Key Takeaways

| Point | Details |
|---|---|
| User Consent is Crucial | Clear communication regarding data collection and usage is essential for user trust in AI coaching apps. |
| Sensitive Data Management | AI coaching platforms must implement rigorous data protection protocols, including anonymization and secure storage, to safeguard personal information. |
| Transparency and Control | Users should prioritize platforms that offer granular privacy controls and transparent data handling policies. |
| Proactive Risk Mitigation | Understanding potential privacy risks and actively managing personal data sharing can significantly enhance user security in AI coaching environments. |

Privacy Fundamentals in AI Coaching Apps

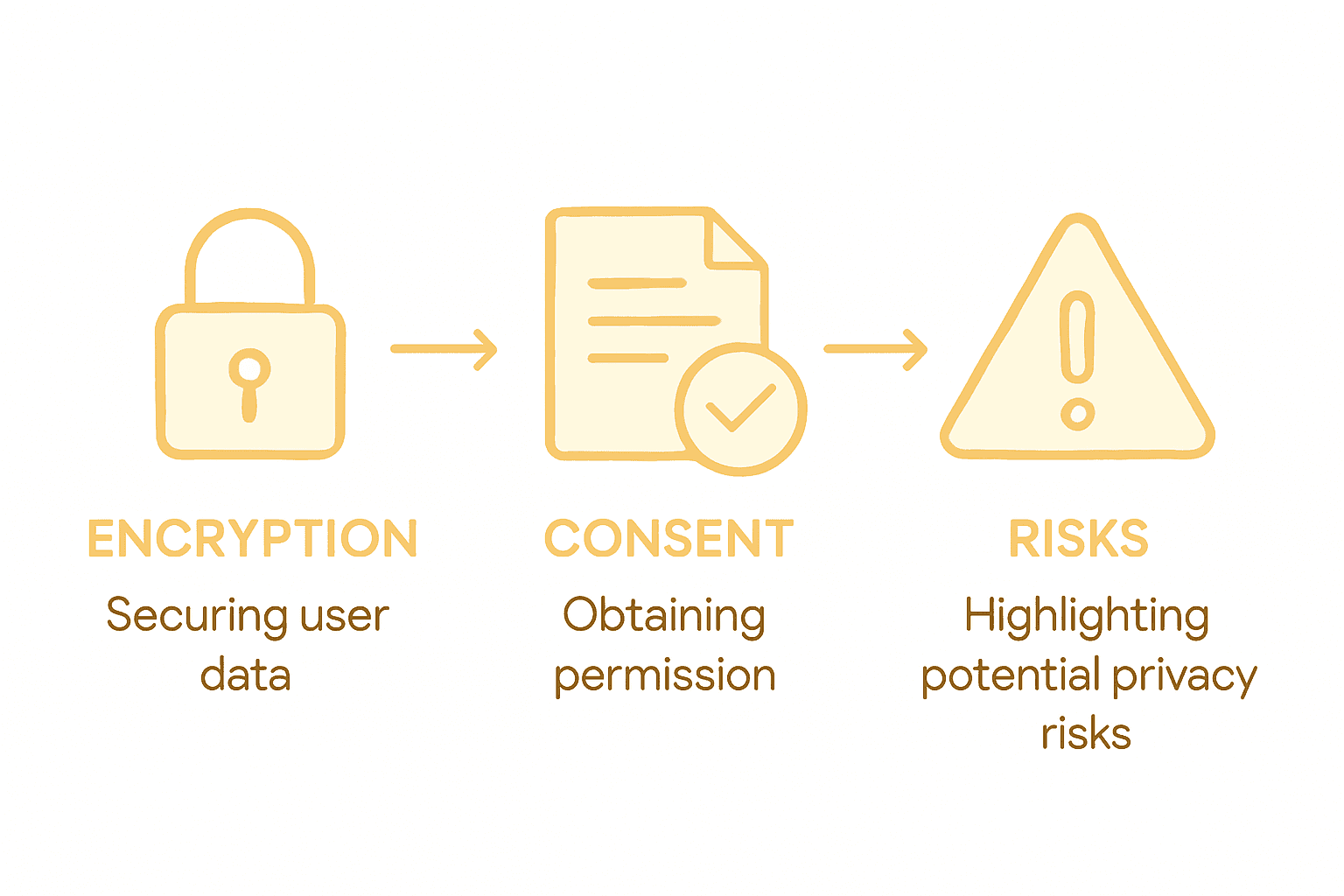

Privacy in AI coaching apps is about more than keeping data out of the wrong hands; it also covers how openly a platform behaves and how much control it gives its users. Users increasingly expect that transparency and control, especially from platforms that process sensitive emotional and relationship information. In practice, privacy in AI coaching systems rests on three core principles: information transparency, user autonomy, and robust data protection.

Several dimensions shape privacy in AI coaching applications. User consent comes first: the app must state clearly what data it collects, how it will use that data, and for which specific purposes. Platforms should also offer granular privacy controls that let users see and change their data-sharing preferences. This approach aligns with emerging Privacy-Ethics Alignment in AI frameworks, which emphasize stakeholder-driven privacy protections.

Key privacy fundamentals users should evaluate when selecting an AI coaching app include:

End-to-end encryption for all conversations

Clear data retention and deletion policies

Anonymization of personally identifiable information

Transparent algorithmic decision-making processes

User-controlled data sharing permissions

Regular third-party privacy audits

While technological safeguards are essential, user awareness remains the most critical privacy defense. Understanding how AI coaching apps handle personal information empowers individuals to make informed choices about their digital interactions. By prioritizing platforms that demonstrate genuine commitment to ethical data practices, users can enjoy personalized coaching experiences without compromising their fundamental right to digital privacy.

Sensitive Data Types and Their Use

AI coaching apps handle unusually sensitive personal data, which calls for careful approaches to managing and protecting it. These platforms typically collect several layers of personal information, from basic demographic details to detailed emotional and behavioral insights. Sensitive data in AI coaching goes well beyond traditional personal identifiers such as names and contact details; it includes subtle psychological patterns and the ways a person communicates with others.

The primary categories of sensitive data collected by AI coaching platforms include emotional response patterns, communication history, relationship dynamics, personal goals, and psychological assessment information. Risks associated with AI-powered data collection include the creation of extensive shadow datasets that could expose users to unintended privacy breaches. This is why robust data anonymization and protection strategies matter so much.

Key sensitive data types users should be aware of include:

Emotional communication transcripts

Relationship interaction histories

Personal goal tracking information

Psychological assessment responses

Behavioral prediction models

Contextual communication metadata

Responsible AI coaching platforms must implement rigorous data protection protocols that go beyond basic anonymization. This involves not just hiding personal identifiers, but creating comprehensive data governance frameworks that respect user privacy while maintaining the platform’s ability to provide personalized coaching insights. By establishing clear boundaries and transparent data usage policies, these platforms can build trust and ensure users feel secure sharing their most intimate personal information.
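One building block behind such protocols is pseudonymization: replacing direct identifiers, such as a phone number, with a stable token before transcripts are stored or analyzed. The Python sketch below assumes a keyed hash (HMAC-SHA256 from the standard library); the names `PSEUDONYM_KEY`, `pseudonymize`, and `strip_identifiers` and the record fields are hypothetical and do not describe any specific app. Note that this is pseudonymization, not full anonymization: whoever holds the key can re-link tokens to people, and the message text itself may still identify someone.

```python
import hashlib
import hmac
import secrets

# Hypothetical secret key. In a real system it would be kept in a key store,
# separate from the pseudonymized data, so the data alone cannot be re-linked.
PSEUDONYM_KEY = secrets.token_bytes(32)


def pseudonymize(identifier: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Map an identifier (e.g. a phone number) to a stable keyed token.

    The same identifier and key always yield the same token, so records can
    still be grouped per user, but the token cannot be reversed without the key.
    """
    # Light normalization so trivial formatting differences map to one token.
    normalized = identifier.strip().lower()
    return hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


def strip_identifiers(record: dict, key: bytes = PSEUDONYM_KEY) -> dict:
    """Return a copy of a hypothetical chat record with direct identifiers removed."""
    cleaned = dict(record)
    cleaned["user_id"] = pseudonymize(cleaned.pop("phone_number"), key)
    cleaned.pop("display_name", None)  # drop fields the coaching logic does not need
    return cleaned


record = {
    "phone_number": "+1 555 0100",
    "display_name": "Alex",
    "message": "I felt anxious before the meeting.",
}
cleaned = strip_identifiers(record)
print(sorted(cleaned))  # ['message', 'user_id']
print(cleaned["user_id"] == pseudonymize("+1 555 0100"))  # True
```

A keyed hash is used instead of a plain SHA-256 of the phone number because phone numbers are easy to enumerate: without a secret key, an attacker could hash every plausible number and reverse the tokens.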

How AI Coaches Protect Your Information

Protecting user information is a complex challenge for AI coaching platforms, with both technical and ethical dimensions. Well-designed systems place several layers of protection around users' most sensitive personal and emotional information. Encryption is only one of these layers; anonymization, isolated storage, regular audits, and user-controlled retention all play a part.

Localized AI chatbots take a different approach to client confidentiality: they run the AI model entirely offline on a local device, so conversations are never sent to an external server. This gives users much more direct control over their personal data and removes the risk of breaches or unauthorized access on a remote service. Cloud-based AI coaching apps instead rely on a framework of several defensive layers working together.

Key protective mechanisms employed by responsible AI coaching platforms include:

End-to-end encryption for all communication channels

Anonymization of personally identifiable information

Secure, isolated data storage systems

Regular independent security audits

Transparent data handling policies

User-controlled data retention and deletion options
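User-controlled retention and deletion can be pictured as a scheduled purge job plus a deletion-request handler. The Python sketch below is a minimal illustration under stated assumptions: messages live in an in-memory list, each user may choose their own retention window (30 days by default, a hypothetical value), and `StoredMessage`, `purge_expired`, and `delete_user_data` are illustrative names, not any real platform's API. A production system would also need to purge backups, logs, and copies held by third-party processors.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical default; a real privacy policy states its own retention period.
DEFAULT_RETENTION = timedelta(days=30)


@dataclass
class StoredMessage:
    user_id: str
    text: str
    created_at: datetime  # timezone-aware UTC timestamp


def purge_expired(messages, retention_by_user, now=None, default=DEFAULT_RETENTION):
    """Keep only messages still inside their owner's retention window.

    retention_by_user maps user_id -> timedelta chosen by that user;
    users without a stated preference fall back to `default`.
    """
    now = now if now is not None else datetime.now(timezone.utc)
    return [
        m for m in messages
        if now - m.created_at < retention_by_user.get(m.user_id, default)
    ]


def delete_user_data(messages, user_id):
    """Honor a deletion request by dropping every message belonging to user_id."""
    return [m for m in messages if m.user_id != user_id]


now = datetime(2025, 12, 10, tzinfo=timezone.utc)
messages = [
    StoredMessage("u1", "old note", now - timedelta(days=45)),
    StoredMessage("u1", "recent note", now - timedelta(days=2)),
    StoredMessage("u2", "week-old note", now - timedelta(days=8)),
]
# u2 chose a 7-day window; u1 keeps the 30-day default.
kept = purge_expired(messages, {"u2": timedelta(days=7)}, now=now)
print([m.text for m in kept])  # ['recent note']
print(delete_user_data(kept, "u1"))  # []
```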

The most advanced AI coaching platforms recognize that protecting user information is not just a technical challenge, but a fundamental ethical responsibility. By implementing robust security protocols, providing clear transparency about data usage, and giving users granular control over their personal information, these platforms build trust and create a safe environment for personal growth and emotional exploration. The goal extends beyond mere data protection to creating a secure, confidential space where users can engage authentically without fear of compromising their privacy.

Consent, User Rights, and Data Control

Consent and user rights form the cornerstone of ethical data management in AI coaching platforms, establishing a critical framework for protecting individual privacy and autonomy. Users now expect more than passive data protection; they want active control over, and transparent management of, their personal information. User expectations for information control highlight the importance of granular consent mechanisms that let individuals understand and direct how their data is interpreted and shared.

Comprehensive user rights in AI coaching environments extend far beyond traditional privacy policies. Stakeholder-driven privacy frameworks emphasize the need for flexible, personalized approaches that accommodate diverse user needs and expectations. This means creating dynamic consent models that allow users to modify their data sharing preferences in real time, with clear, understandable interfaces that demystify complex data management processes.

Key user rights and data control mechanisms include:

Explicit consent for each type of data collection

Granular permissions for data usage

Real-time data access and modification

Comprehensive data deletion options

Transparent algorithmic decision-making explanations

Opt-out capabilities for specific data processing
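Several rights in this list (explicit consent per type of processing, granular permissions, real-time changes, and opt-outs) map naturally onto a small data structure. The Python sketch below assumes hypothetical processing purposes and names (`Purpose`, `ConsentRecord`, `require_consent`); it is not any particular app's consent system. It shows a default-deny design: nothing is processed until the user grants that specific purpose, every change is logged for auditability, and a revocation takes effect on the very next check.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum


class Purpose(Enum):
    """Hypothetical processing purposes; a real app would define its own."""
    COACHING_REPLIES = "coaching_replies"
    EMOTION_DETECTION = "emotion_detection"
    MODEL_IMPROVEMENT = "model_improvement"


@dataclass
class ConsentRecord:
    user_id: str
    granted: dict = field(default_factory=dict)  # Purpose -> time consent was given
    history: list = field(default_factory=list)  # audit trail of every change

    def _log(self, action: str, purpose: Purpose) -> None:
        self.history.append((datetime.now(timezone.utc), action, purpose.value))

    def grant(self, purpose: Purpose) -> None:
        self.granted[purpose] = datetime.now(timezone.utc)
        self._log("grant", purpose)

    def revoke(self, purpose: Purpose) -> None:
        # Revocation is immediate: the next allows() check for this purpose fails.
        self.granted.pop(purpose, None)
        self._log("revoke", purpose)

    def allows(self, purpose: Purpose) -> bool:
        # Default deny: only explicitly granted purposes are permitted.
        return purpose in self.granted


def require_consent(consent: ConsentRecord, purpose: Purpose) -> None:
    """Guard to call before any processing step for the given purpose."""
    if not consent.allows(purpose):
        raise PermissionError(f"{consent.user_id} has not consented to {purpose.value}")


consent = ConsentRecord("user-123")
consent.grant(Purpose.COACHING_REPLIES)
print(consent.allows(Purpose.COACHING_REPLIES))   # True
print(consent.allows(Purpose.MODEL_IMPROVEMENT))  # False
consent.revoke(Purpose.COACHING_REPLIES)
print(consent.allows(Purpose.COACHING_REPLIES))   # False
```

Keeping one entry per purpose, rather than a single "accept all" flag, is what makes opting out of one use (for example, model improvement) possible without giving up the core coaching service.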

Ultimately, responsible AI coaching platforms recognize that user consent is not a one-time checkbox, but an ongoing dialogue. By providing clear, accessible information about data practices, offering intuitive control mechanisms, and maintaining absolute transparency, these platforms can build trust and empower users to engage confidently in their personal growth journeys. The goal is creating a collaborative environment where users feel fully informed, respected, and in control of their personal information.

Common Privacy Risks and How to Avoid Them

AI coaching platforms present unique privacy challenges that require users to be proactively vigilant about their personal information. Security vulnerabilities in AI-based mobile applications underscore the critical need for users to understand and mitigate potential data exposure risks. These platforms collect extensive personal and emotional data, creating multiple potential points of vulnerability that users must carefully navigate.

Privacy risks in AI coaching environments extend beyond traditional data breaches to include challenges of algorithmic transparency and data interpretation. Interconnected privacy and fairness challenges reveal that protecting personal information involves more than preventing unauthorized access; it also requires ethical and responsible data management across multiple dimensions.

Key privacy risks users should be aware of include:

Unintended data inference and profiling

Potential algorithmic bias

Unauthorized data sharing between platforms

Inadequate anonymization techniques

Long-term data retention without clear purpose

Potential re-identification through metadata
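The last two risks can be made concrete with k-anonymity, one well-known (though not sufficient) measure of re-identification risk. A dataset is k-anonymous if every combination of quasi-identifiers (fields that are not names but can narrow down a person, such as city, age band, or usual time of activity) is shared by at least k records. The Python sketch below uses hypothetical field names and data; `group_sizes`, `k_anonymity`, and `risky_groups` are illustrative helpers, not a standard library API.

```python
from collections import Counter


def group_sizes(records, quasi_identifiers):
    """Count how many records share each combination of quasi-identifier values."""
    return Counter(tuple(r[q] for q in quasi_identifiers) for r in records)


def k_anonymity(records, quasi_identifiers):
    """Return k, the size of the smallest group (0 for an empty dataset)."""
    sizes = group_sizes(records, quasi_identifiers)
    return min(sizes.values()) if sizes else 0


def risky_groups(records, quasi_identifiers, k):
    """List the quasi-identifier combinations shared by fewer than k records."""
    return [combo for combo, n in group_sizes(records, quasi_identifiers).items() if n < k]


# Hypothetical "anonymized" export: no names or phone numbers, only metadata.
export = [
    {"city": "Austin", "age_band": "30-39", "active_hour": 23, "mood": "anxious"},
    {"city": "Austin", "age_band": "30-39", "active_hour": 23, "mood": "calm"},
    {"city": "Austin", "age_band": "30-39", "active_hour": 23, "mood": "sad"},
    {"city": "Boise", "age_band": "60-69", "active_hour": 5, "mood": "lonely"},
]
qi = ["city", "age_band", "active_hour"]
print(k_anonymity(export, qi))        # 1
print(risky_groups(export, qi, k=3))  # [('Boise', '60-69', 5)]
```

Here the single Boise record is unique on its metadata alone, so someone who knows a person in that city and age band who chats around 5 a.m. could link them to the "lonely" entry even though no identifier was exported. Common remedies are coarsening fields (a region instead of a city) or suppressing rare records; k-anonymity also does not help when everyone in a group shares the same sensitive value.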

Mitigating these risks requires a proactive, multilayered approach. Users must carefully review privacy policies, utilize granular privacy settings, limit shared personal information, and regularly audit their data footprint. By maintaining awareness, asking critical questions about data usage, and selecting platforms with robust privacy frameworks, individuals can significantly reduce their exposure to potential privacy vulnerabilities while still benefiting from the transformative potential of AI coaching technologies.

Experience Privacy-First AI Coaching on WhatsApp

Navigating privacy concerns in AI coaching apps is challenging but essential. You want emotional guidance that respects your data with transparent consent, end-to-end encryption, and real-time control over how your personal information is used. If avoiding hidden data sharing, unwanted profiling, and opaque algorithmic decisions matters to you, then choosing a coach that truly honors your privacy is critical.

Discover how the Wisdom App delivers unmatched privacy protection by keeping your conversations securely inside WhatsApp, powered by superintelligent AI that respects your data rights. Wisdom offers live emotion detection, relationship superintelligence, and data-driven insights with privacy-first infrastructure hosted on AWS. Take control over your emotional growth without compromising confidentiality. Start your personalized coaching journey now by signing up at Wisdom App Sign In and take the first step toward smarter, safer self-improvement today.

Frequently Asked Questions

What types of personal data do AI coaching apps collect?

AI coaching apps typically collect various types of personal data, including emotional communication transcripts, relationship interaction histories, personal goal tracking information, psychological assessment responses, and behavioral prediction models.

How can I ensure my data is protected when using an AI coaching app?

To ensure your data is protected, look for apps that offer end-to-end encryption, clear data retention and deletion policies, anonymization of personally identifiable information, and user-controlled data sharing permissions.

What user rights should I expect from AI coaching platforms regarding my personal information?

Users should expect explicit consent for data collection, granular permissions for data usage, real-time access and modification of their data, comprehensive deletion options, and transparent explanations of algorithmic decision-making.

What are common privacy risks associated with using AI coaching apps?

Common privacy risks include unintended data inference and profiling, potential algorithmic bias, unauthorized data sharing between platforms, inadequate anonymization techniques, long-term data retention without clear purposes, and potential re-identification through metadata.
